Registration is required for this event and can only be done through this event page on steim.org.

Discounts available to HKU/Music Technology students and alumni.

This workshop will provide an introduction to the FTM&Co extensions for Max/MSP, covering both basic and advanced use of the free IRCAM libraries FTM, MnM, Gabor, and CataRT for real-time musical and artistic applications.

The FTM&Co tools make it possible to program applications that work with sound, music and gestural data in a more complex and flexible way than is usual with other tools operating in the time and frequency domains.

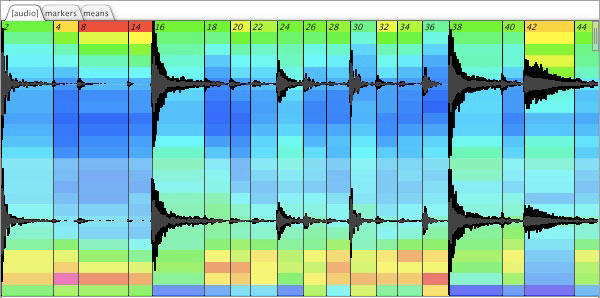

The basic idea of FTM is to extend the data types exchanged between the objects in Max/MSP. FTM adds complex data structures such as matrices, sequences, dictionaries, breakpoint functions, and others that are helpful for processing music, sound and gestural (motion capture) data. In addition, FTM includes a toolkit of visualization and editor components, and operators (expressions and externals) for using these new data structures, together with more powerful file import/export objects for audio, MIDI, text, and SDIF (Sound Description Interchange Format, http://sdif.sourceforge.net/).

We will look at the parts and packages of FTM through practical examples of applications in the areas of sound analysis, transformation and synthesis, gesture following (through machine learning), and the live manipulation of musical scores.

Particular topics we will cover include:

- arbitrary-rate signal processing (Gabor)

- matrix operations

- statistics and machine learning (MnM)

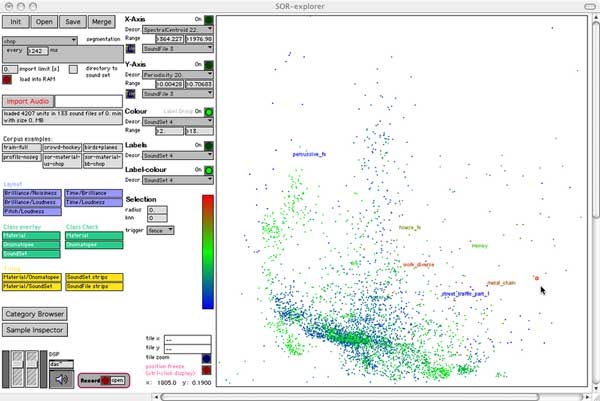

- corpus-based concatenative synthesis (CataRT)

- sound description data exchange (SDIF)

- using Jitter with FTM&Co for live and interactive visuals

The concepts presented will be tried out and reinforced through programming exercises on real-time musical applications and through free experimentation.

DETAILS //

Date: October 24 - 27, 2011

Time: 10:00 - 16:00 each day

Cost: €200 (free for MT/HKU students, 50% discount for HKU alumni)

Location: STEIM, Achtergracht 19, Amsterdam

Number of participants: 15

Reserve a spot online through the registration link above no later than one week before the workshop!

PREREQUISITES / WHAT TO BRING //

A working knowledge of Max/MSP is required for this workshop. Knowledge of a programming or scripting language is also a big plus. Some of the data structure concepts in FTM&Co will be familiar to those with notions of object-oriented programming. Users of Matlab will feel right at home with MnM.

All attendees are expected to bring their own laptop computer with Max/MSP installed. For those without laptops, STEIM has a limited number of desktop computers available for use during the workshop. Please send an email to workshops[AT]steim[dot]nl if you need to use one of our computers.

TEACHER //

Diemo Schwarz is a researcher-developer in real-time applications of computers to music with the aim of improving musical interaction, notably sound analysis-synthesis, and interactive corpus-based concatenative synthesis.

He has worked at Ircam (Institut de Recherche et Coordination Acoustique/Musique) in Paris, France, since 1997, where he combines his studies of computer science and computational linguistics at the University of Stuttgart, Germany, with his interest in music as an active performer and musician. He holds a PhD in computer science applied to music from the University of Paris, awarded in 2004 for the development of a new method of concatenative musical sound synthesis by unit selection from a large database. This work continues in the CataRT application for real-time interactive corpus-based concatenative synthesis within Ircam's Real-Time Music Interaction (IMTR) team.